Table of Contents

- Developing Wolframe Server Applications

- Foreword

- 1. Introduction

- 2. Installation via binary packages

- 3. Configuration

- 4. AAAA

- 5. Data processing

- 5.1. Processor Configuration

- 5.2. Application Server Requests

- 5.3. Command handler

- 5.3.1. The standard command handler

- Introduction

- Example configuration

- Example command description

- Command description language

- Keywords

- Simple document map

- Command with action prefix

- Explicit function name declaration

- Returned document declaration

- Returned document meta data

- Skipping the document validation

- Return a standalone document

- Explicit filter definitions for a command

- Authorization checks

- Adding parameters from the execution context

- Using brackets

- Overview

- 5.4. Functions

- 5.4.1. Transactions in TDL

- Introduction

- Some internals

- Configuration

- Language description

- Subroutines

- Transaction function declarations

- Main processing instructions

- Preprocessing instructions

- Selector path

- Referencing Database Results

- Naming database results

- Referencing Subroutine Parameters

- Constraints on database results

- Rewriting error messages for the client

- substructures in the result

- Explicit sefinition of elements in the result

- Database specific code

- Subroutine templates

- Includes

- Auditing

- 5.4.2. Functions in .NET

- 5.4.3. Functions in python

- 5.4.4. Functions in Lua

- 5.4.5. Functions in native C++

- 5.5. Forms

- 5.6. Filters

- 5.7. Testing and defect handling

- Glossary

- Index

- A. GNU General Public License version 3

- Wolframe Clients

- 1. Introduction

- 2. Clients with PHP

- 3. Clients with .NET (C#)

- 4. Clients with Qt

- Index

- Wolframe Installation from Source

- 1. Installation from source

- 1.1. Source Releases

- 1.2. Building on Unix systems

- 1.2.1. Prerequisites

- 1.2.2. Basic build instructions

- 1.2.3. GCC compiler

- 1.2.4. clang compiler

- 1.2.5. Intel compiler

- 1.2.6. Using ccache and distcc

- 1.2.7. Platform-specific build instructions

- 1.2.8. Boost

- 1.2.9. Secure Socket Layer (SSL)

- 1.2.10. SQLite database support

- 1.2.11. PostgreSQL database support

- 1.2.12. Oracle database support

- 1.2.13. XML filtering support with libxml2 and libxslt

- 1.2.14. XML filtering support with Textwolf

- 1.2.15. JSON filtering support with cJSON

- 1.2.16. Scripting support with Lua

- 1.2.17. Scripting support with Python

- 1.2.18. Printing support with libhpdf

- 1.2.19. Image processing with FreeImage

- 1.2.20. zlib and libpng

- 1.2.21. Support for ICU

- 1.2.22. Internationalization support with gettext

- 1.2.23. Authentication support with PAM

- 1.2.24. Authentication support with SASL

- 1.2.25. Testing Wolframe

- 1.2.26. Testing with Expect

- 1.2.27. Building the documentation

- 1.2.28. Installation

- 1.2.29. Manual dependency generation

- 1.2.30. Creating source tarballs

- 1.2.31. Building the wolfclient

- RedHat/Centos/Scientific Linux 5 and similar Linux distributions

- RedHat/Centos/Scientific Linux 6 and 7 or similar Linux distributions

- Fedora 19 and 20 and similar distributions

- Debian 6 and 7

- Ubuntu 10.04.1 and 12.04

- Ubuntu 13.10 and 14.04

- openSUSE 12.3, SLES and similar Linux distributions

- openSUSE 13.1

- ArchLinux

- Slackware

- FreeBSD 8 and 9

- FreeBSD 10

- NetBSD

- OpenIndiana 151a8

- Solaris 10

- 1.3. Building on Windows systems (the NMAKE way)

- 1.3.1. Prerequisites

- 1.3.2. Basic build instructions

- 1.3.3. Using ccache and distcc

- 1.3.4. Boost

- 1.3.5. Secure Socket Layer (SSL)

- 1.3.6. SQLite database support

- 1.3.7. PostgreSQL database support

- 1.3.8. Oracle database support

- 1.3.9. XML filtering support with libxml2 and libxslt

- 1.3.10. XML filtering support with Textwolf

- 1.3.11. JSON filtering support with cJSON

- 1.3.12. Scripting support with Lua

- 1.3.13. Scripting support with Python

- 1.3.14. Printing support with libhpdf

- 1.3.15. Image processing with FreeImage

- 1.3.16. zlib and libpng

- 1.3.17. Support for ICU

- 1.3.18. Testing Wolframe

- 1.3.19. Testing with Expect

- 1.3.20. Building the documentation

- 1.3.21. Building the wolfclient

- Wolframe Server Extension Modules

Wolframe Application Development Manual

Copyright © 2010 - 2014 Project Wolframe

Commercial Usage. Licensees holding valid Project Wolframe Commercial licenses may use this file in accordance with the Project Wolframe Commercial License Agreement provided with the Software or, alternatively, in accordance with the terms contained in a written agreement between the licensee and Project Wolframe.

GNU General Public License Usage. Alternatively, you can redistribute this file and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

Wolframe is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with Wolframe. If not, see http://www.gnu.org/licenses/

If you have questions regarding the use of this file, please contact Project Wolframe.

Aug 29, 2014 version 0.0.3

Table of Contents

- Foreword

- 1. Introduction

- 2. Installation via binary packages

- 3. Configuration

- 4. AAAA

- 5. Data processing

- 5.1. Processor Configuration

- 5.2. Application Server Requests

- 5.3. Command handler

- 5.3.1. The standard command handler

- Introduction

- Example configuration

- Example command description

- Command description language

- Keywords

- Simple document map

- Command with action prefix

- Explicit function name declaration

- Returned document declaration

- Returned document meta data

- Skipping the document validation

- Return a standalone document

- Explicit filter definitions for a command

- Authorization checks

- Adding parameters from the execution context

- Using brackets

- Overview

- 5.4. Functions

- 5.4.1. Transactions in TDL

- Introduction

- Some internals

- Configuration

- Language description

- Subroutines

- Transaction function declarations

- Main processing instructions

- Preprocessing instructions

- Selector path

- Referencing Database Results

- Naming database results

- Referencing Subroutine Parameters

- Constraints on database results

- Rewriting error messages for the client

- substructures in the result

- Explicit sefinition of elements in the result

- Database specific code

- Subroutine templates

- Includes

- Auditing

- 5.4.2. Functions in .NET

- 5.4.3. Functions in python

- 5.4.4. Functions in Lua

- 5.4.5. Functions in native C++

- 5.5. Forms

- 5.6. Filters

- 5.7. Testing and defect handling

- Glossary

- Index

- A. GNU General Public License version 3

List of Tables

- 3.1. Windows service configuration settings

- 3.2. Unix daemon configuration settings

- 3.3. Global server settings

- 3.4. Listen settings

- 3.5. ListenSSL settings

- 3.6. Restrictions settings

- 3.7. Log message types

- 3.8. LoadModules configuration settings

- 3.9. Global Settings

- 3.10. PostgreSQL database configuration settings

- 3.11. SSL configuration settings

- 3.12. SSL modes

- 3.13. Sqlite3 database configuration settings

- 3.14. Oracle database configuration settings

- 4.1. Authentication configuration settings

- 5.1. Options

- 5.2. Marshalling Tags

- 5.3. Attributes of assembly declarations

- 5.4. Method

- 5.5. List of Atomic Data Types

- 5.6. Methods of 'datetime'

- 5.7. Methods of 'datetime'

- 5.8. Filter interface iterator elements

- 5.9. Method

- 5.10. Data forms declared by DDL

- 5.11. Data forms returned by functions

- 5.12. Document

- 5.13. Logger functions

- 5.14. Global functions

- 5.15. Element attributes in simpleform

The Wolframe project was started in 2010. The goal was to create a platform for fully customizable business applications that can be hosted in modern system environments.

This manual introduces the architecture of Wolframe and explains how to build client/server applications with it. After reading this you should be able to create an application on your own.

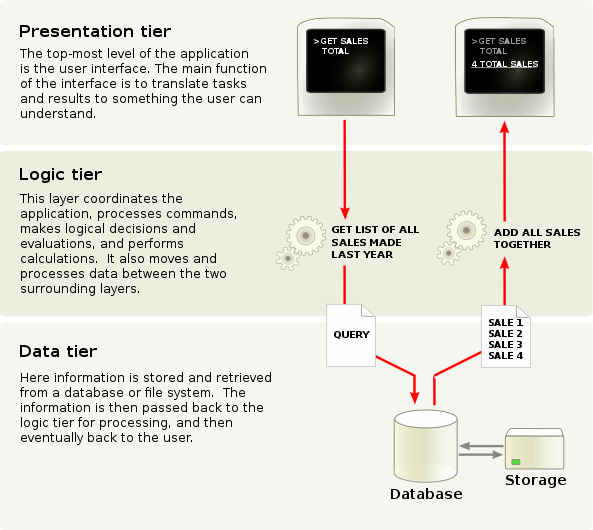

First we describe the overall architecture and the data flow in a Wolframe application.

Wolframe is a 3-tier application server.

The presentation tier of Wolframe is implemented as a thin client. It maps the presentation of the application from the request answers it gets from the server. For some clients the data describing this mapping can also be loaded from the server when connecting to it. The whole processing ot the application is made by the server.

The logic tier of Wolframe defines the processing of application server requests and the rules for access control with the configurable mechanisms for authorization.

A client that logs in to the system passes an authentication procedure. The resulting authentication defines the privileges to execute functions or accessing resources (authorization). The chapter AAAA (Authentication,Authorization,Auditing and Accounting) will introduce the several aspects covered by Wolframe besides data processing.

A client that passed authentication can send application server requests to the server. A request consists of a command name plus a structured content also called document. The server returns a single document to the presentation tier as answer. Many different programming/scripting languages are supported to define the input/output mapping between the layers. Wolframe introduces three concepts as data processing building blocks for handling the server requests:

Filters: Filters are transforming serialized input data (XML,JSON,CSV,etc.) to a unified serialization of hierarchically structured data and to serialize any form of processed data for output. Filters are implemented as loadable modules (e.g. XML filter based on libxml2, JSON filter based on cJSON) or as scripts based on a filter module (XSLT filter script for rewriting input or output)

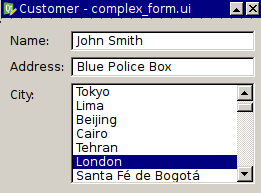

Forms: Forms are data structures defined in a data definition language (DDL). Forms are used to validate and normalize input (XML validation, token normalization, structure definition). The recommended definition of a command in the logic tier has a form to validate its input and a form to validate its output before returning it to the caller.

Functions: Functions delegate processing to the data tier (transactions) or they are simple data transformations or they serve as interface to integrate with other environments (e.g. .NET). Functions have a unique name and are called with a structure as argument and a structure as result. Functions can call other functions for delegation, e.g. a transaction definition can call a .NET function for preprocessing its input or a .NET function can call a Python function to do parts of the processing.

The chapter data processing will describe these building blocks.

The data tier of Wolframe defines the interface to the databases of the application. A transaction description is passed as a complete data structure to the database that returns the result of the transaction. The logic tier builds the result data structure out of this result and completes other actions defined as part of the transaction (like audit) before invoking the completion of the transaction in the data tier with a commit or rollback. All databases of the data tier are integrated with the same interface into the server. Nevertheless there is no unified database language involved and transactions can use proprietary language constructs of the underlying database. Wolframe supports many databases like for example PostgreSQL, Sqlite and Oracle and others can be added by just implementing the database interface as a loadable module.

Table of Contents

This section describes how to install the Wolframe application via packages on various operating systems.

Linux distributions are currently built on the Open Build Server (http://openbuildservice.org) and on a bunch of virtual machines.

The resulting packages and the repository metadata is hosted on Sourceforge (http://sourceforge.net).

The packages are always build with the default system compiler, which is currently GNU gcc.

Packages for proprietary software (like the Oracle database module) have to be built manually, they can not be distributed as binary packages due to license problems.

wolframe-0.0.3 .x86_64.rpm: contains the Wolframe core server with minimal 3rd party software requirements

wolframe-sqlite3-0.0.3 .x86_64.rpm: the database module for Sqlite3 databases

wolframe-postgresql-0.0.3 .x86_64.rpm: the database module for PostgreSQL databases

wolframe-libxml2-0.0.3 .x86_64.rpm: filtering module for XML and XSLT (using libxml2/libxslt)

wolframe-textwolf-0.0.3 .x86_64.rpm: filtering module for XML (using textwolf)

wolframe-cjson-0.0.3 .x86_64.rpm: filtering module for JSON (using cJSON)

wolframe-pam-0.0.3 .x86_64.rpm: authentication module for PAM

wolframe-sasl-0.0.3 .x86_64.rpm: authentication module for SASL

wolframe-python-0.0.3 .x86_64.rpm: language bindings for Python

wolframe-lua-0.0.3 .x86_64.rpm: language bindings for Lua

wolframe-libhpdf-0.0.3 .x86_64.rpm: printing module using libhpdf

wolframe-freeimage-0.0.3 .x86_64.rpm: image manipuation module using FreeImage

wolframe-libclient-0.0.3 .x86_64.rpm: C/C++ client library

wolframe-client-0.0.3 .x86_64.rpm: command line tool

wolfclient-0.0.4 .x86_64.rpm: Wolframe graphical frontend

Installing the packages via repositories is usually the prefered way.

First install the repository file for the corresponding distribution (as example we choose Centos 6):

cd /etc/yum.repos.d wget http://sourceforge.net/projects/wolframe/files/repositories/CentOS-6/wolframe.repo

You can list all available Wolframe packages with:

yum search wolframe

You install the main Wolframe package with:

yum install wolframe

You have to accept the signing key:

Retrieving key from http://sourceforge.net/projects/wolframe/files/repositories/CentOS-6/repodata/repomd.xml.key Importing GPG key 0x9D404026: Userid: "home:wolframe_user OBS Project <home:wolframe_user@build.opensuse.org>" From : http://sourceforge.net/projects/wolframe/files/repositories/CentOS-6/repodata/repomd.xml.key Is this ok [y/N]: y

You can start the service with:

service wolframed start

respectively

systemctl start wolframed

on newer Fedora systems.

wolframe_0.0.3 _amd64.deb: contains the Wolframe core server with minimal 3rd party software requirements

wolframe_sqlite3-0.0.3 _amd64.deb: the database module for Sqlite3 databases

wolframe_postgresql-0.0.3 _amd64.deb: the database module for PostgreSQL databases

wolframe-libxml2_0.0.3 _amd64.deb: filtering module for XML and XSLT (using libxml2/libxslt)

wolframe_textwolf_0.0.3 _amd64.deb: filtering module for XML (using textwolf)

wolframe-cjson_0.0.3 _amd64.deb: filtering module for JSON (using cJSON)

wolframe-pam_0.0.3 _amd64.deb: authentication module for PAM

wolframe-sasl_0.0.3 _amd64.deb: authentication module for SASL

wolframe-python_0.0.3 _amd64.deb: language bindings for Python

wolframe-lua_0.0.3 _amd64.deb: language bindings for Lua

wolframe-libhpdf_0.0.3 _amd64.deb: printing module using libhpdf

wolframe-freeimage_0.0.3 _amd64.deb: image manipuation module using FreeImage

wolframe-libclient_0.0.3 _amd64.deb: C/C++ client library

wolframe-client_0.0.3 _amd64.deb: command line tool

wolfclient_0.0.4 _amd64.deb: Wolframe graphical frontend

Installing the packages via repositories is usually the prefered way.

Note: Some older versions of Ubuntu (like Ubuntu 12.04 LTS, 10.04 LTS or Debian 6) have problems to download the metadata files from Sourceforge. If you get messages like:

W: Failed to fetch http://sourceforge.net/projects/wolframe/files/repositories/Ubuntu-12.04_LTS/Release.gpg Got a single header line over 360 chars Err http://sourceforge.net Packages

then you have to download the binaries manually.

Add a new repository file /etc/apt/sources.list.d/wolframe.list

which contains:

deb http://sourceforge.net/projects/wolframe/files/repositories/Ubuntu-14.04_LTS/ /

(as example we choose Ubuntu 14.04).

Download the signing key:

wget http://wolframe.net/Release.key

Verify that the key then add it with:

apt-key add - < Release.key

Update the repository with:

apt-get update

You can list all available Wolframe packages with:

apt-cache search wolframe

You install the main Wolframe package with:

apt-get install wolframe

To start the Wolframe service you have to

edit the file /etc/default/wolframe

and enable the wolframe daemon there:

RUN=yes

You can start the service now with:

service wolframed start

wolframe-0.0.3 .x86_64.rpm: contains the Wolframe core server with minimal 3rd party software requirements

wolframe-sqlite3-0.0.3 .x86_64.rpm: the database module for Sqlite3 databases

wolframe-postgresql-0.0.3 .x86_64.rpm: the database module for PostgreSQL databases

wolframe-libxml2-0.0.3 .x86_64.rpm: filtering module for XML and XSLT (using libxml2/libxslt)

wolframe-textwolf-0.0.3 .x86_64.rpm: filtering module for XML (using textwolf)

wolframe-cjson-0.0.3 .x86_64.rpm: filtering module for JSON (using cJSON)

wolframe-pam-0.0.3 .x86_64.rpm: authentication module for PAM

wolframe-sasl-0.0.3 .x86_64.rpm: authentication module for SASL

wolframe-python-0.0.3 .x86_64.rpm: language bindings for Python

wolframe-lua-0.0.3 .x86_64.rpm: language bindings for Lua

wolframe-libhpdf-0.0.3 .x86_64.rpm: printing module using libhpdf

wolframe-freeimage-0.0.3 .x86_64.rpm: image manipuation module using FreeImage

wolframe-libclient-0.0.3 .x86_64.rpm: C/C++ client library

wolframe-client-0.0.3 .x86_64.rpm: command line tool

wolfclient-0.0.4 .x86_64.rpm: Wolframe graphical frontend

Note: This is currently not working perfectly and some steps have to be done manually.

First we add the Wolframe repository for the corresponding distribution (as example we choose OpenSUSE 13.1):

zypper addrepo http://sourceforge.net/projects/wolframe/files/repositories/openSUSE-13.1/wolframe.repo

You may get the following error:

/var/adm/mount/AP_0xmiyYP3/projects/wolframe/files/repositories/openSUSE-13.1/wolframe.repo: Line 1 is missing '=' sign Is it a .repo file? See http://en.opensuse.org/Standards/RepoInfo for details.

Try to download the repo file by hand and install it by hand:

wget http://sourceforge.net/projects/wolframe/files/repositories/openSUSE-13.1/wolframe.repo zypper addrepo wolframe.repo

Now refresh your repositories with:

zypper refresh

If you get the following message

File 'repomd.xml' from repository 'Wolframe Project (openSUSE-13.1)' is unsigned, continue? [yes/no] (no): yes

the signing key could not be downloaded from SourceForge. Accept it in this case anyway.

If you get the following message

File './repodata/ea7cb8d9a0caa2c3d8977919be124accdf55c6b8952ddee72f1b48f4decb0644-primary.xml.gz' not found on medium 'http://sourceforge.net/projects/wolframe/files/repositories/openSUSE-13.1/' Abort, retry, ignore? [a/r/i/? shows all options] (a): u^H Invalid answer ''. [a/r/i/? shows all options] (a): ? a - Skip retrieval of the file and abort current operation. r - Try to retrieve the file again. i - Skip retrieval of the file and try to continue with the operation without the file. u - Change current base URI and try retrieving the file again. [a/r/i/? shows all options] (a): u

the Sourceforge redirect didn't work and you have to force the baseURL to be a SourceForge mirror like:

New URI: http://freefr.dl.sourceforge.net/project/wolframe/repositories/openSUSE-13.1/

You can list all available Wolframe packages with:

zypper se wolframe zypper se wolfclient

You install the main Wolframe package with:

zypper install wolframe

You can start the service with:

service wolframed start

respectively

systemctl start wolframed

on newer openSUSE systems.

Wolframe is currently only available as two monolithic packages:

wolframe-0.0.3 .x86_64.rpm: contains the Wolframe core server with all modules for 3rdParty software included,

wolfclient-0.0.4 .x86_64.rpm: Wolframe graphical frontend

You can use the packages from http://sourceforge.net/projects/wolframe/files/wolframe-binaries/ directly.

First add the following section to /etc/pacman.conf:

[wolframe] SigLevel = Optional DatabaseRequired Server = http://sourceforge.net/projects/wolframe/files/repositories/ArchLinux/$arch

Fetch and verify the sigining key, import and locally sign the key:

wget http://wolframe.net/Release.key pacman-key --add Release.key pacman-key --lsign 9D404026

Alternatively you can also disable the verification of the signature of the database by removing 'DatabaseRequired' from the 'SigLevel' option.

Update the repository data with:

pacman -Syy

You can list all available Wolframe packages with:

pacman -Sl wolframe

You install the main Wolframe package with:

pacman -S wolframe

You can start the service with:

systemctl start wolframed

You can also customize your build by downloading the build files from the AUR at https://aur.archlinux.org/packages/?O=0&K=wolframe and customize them to your needs.

For instance:

yaourt -G wolframe cd wolframe makepkg

Wolframe is currently only available as two monolithic packages:

wolframe-0.0.3 .x86_64.rpm: contains the Wolframe core server with all modules for 3rdParty software included,

wolfclient-0.0.4 .x86_64.rpm: Wolframe graphical frontend

Download the package file (we picked 64-bit Slackware 14 for example):

wget http://sourceforge.net/projects/wolframe/files/wolframe-binaries/0.0.3 /Slackware-14/x86_64/wolframe-0.0.3 -x86_64.tgz

You install the Wolframe package with:

installpkg wolframe-0.0.3 -x86_64.tgz

You can start the service with:

/etc/rc.d/rc.wolframed start

Download the package file (we choose 64-bit FreeBSD 9 for example):

wget http://sourceforge.net/projects/wolframe/files/wolframe-binaries/0.0.3 /FreeBSD-9/x86_64/wolframe-0.0.3 -x86_64.tgz

You install the Wolframe package with:

pkg_add wolframe-0.0.3 -x86_64.tgz

The FreeBSD packages contain the whole server and the whole client respectively.

You can start the service with:

/usr/local/etc/rc.d/wolframed onestart

To start the Wolframe service at system boot time

you have to edit the file /etc/rc.conf

and enable the wolframe daemon there with:

wolframed_enable="YES"

You can start the service now with:

service wolframed start

Download the package file (we choose 64-bit NetBSD 6 for example):

wget http://sourceforge.net/projects/wolframe/files/wolframe-binaries/0.0.3 /NetBSD-6/x86_64/wolframe-0.0.3 -x86_64.tgz

You install the Wolframe package with:

pkg_add wolframe-0.0.3 -x86_64.tgz

The NetBSD packages contain the whole server and the whole client respectively.

You can start the service with:

/usr/pkg/share/examples/rc.d/wolframed onestart

To start the Wolframe service at system boot time

you have to edit the file /etc/rc.conf

and enable the wolframe daemon there with:

wolframed=YES

Copy the example startup script to the final place:

cp /usr/pkg/share/examples/rc.d/wolframed /etc/rc.d/

You can start the service now with:

/etc/rc.d/wolframed

Download the package file for SPARC Solaris 10 (the only one we can build at the moment):

wget http://sourceforge.net/projects/wolframe/files/wolframe-binaries/0.0.3 /Solaris-10/sparc/wolframe-0.0.3 -sparc-5.10.pkg.Z

You install the Wolframe package with:

uncompress wolframe-0.0.3 -sparc-5.10.pkg.Z pkgadd -d wolframe-0.0.2-sparc-5.10.pkg all

The Solaris packages contain the whole server and the whole client respectively.

The package installs to the /opt/csw directory tree.

Install the CSW toolchain (http://www.opencsw.org) and the minimally required packages:

pkgadd -d http://get.opencsw.org/now pkgutil --install CSWlibgcc CSWlibssl1

Depending on the third party software you plan to use you also have to install those packages, for instance to run a Sqlite3 database you have to install 'CSWsqlite3'.

You can start the service now with:

/etc/opt/csw/init.d/wolframed start

Table of Contents

This chapter describes the configuration of the wolframe server besides the data processing described in the section data processing and the AAAA backends described in the section AAAA.

The service configuration is depending on the platform you run the Wolframe server. We show the configuration for Windows and Unix in different sections:

The service configuration for Windows is defined in the section

Service. The following table describes the settings

in the service configuration for Windows. The service configuration

for Windows is only read at installation time. Changing them later

in the configuration file has no effect.

Table 3.1. Windows service configuration settings

| Name | Arguments | Description |

|---|---|---|

| ServiceName | string | Parameter for service registration. Defines the name of the service |

| DisplayName | string | Parameter for service registration. Defines the display name of the service |

| Description | string | Parameter for service registration. Defines the description of the service |

Example configuration:

Service {

ServiceName wolframe

DisplayName "Wolframe Daemon"

Description "Wolframe Daemon"

}

The daemon configuration for Unix systems is defined in the section

Daemon. The following table describes the settings

in a daemon configuration:

Table 3.2. Unix daemon configuration settings

| Name | Arguments | Description |

|---|---|---|

| User | identifier | Defines the name of the user the Wolframe server should run as. |

| Group | identifier | Defines the name of the group of the user the Wolframe server should run as. |

| PidFile | filepath | Defines the path to the file used as pid file |

Example configuration:

Daemon {

User wolframe

Group wolframe

PidFile /var/run/wolframed.pid

}

The server configuration is defined in the Server

section. The server specifies the set of sockets a client can

connect to. It defines rules for how and from where a client

can connect and the properties of the connection.

It also defines some global settings listed in the following table:

Table 3.3. Global server settings

| Name | Description |

|---|---|

| MaxConnections | The maximum number of total simultaneous connections (clients). If not specified the default is used. The default is the operating system limit. |

| Threads | Number of threads for serving client connections. |

Here is an example configuration of the server global settings:

Server

{

MaxConnections 12

Threads 7

}

The server has two types of sockets to configure in the

sections Listen and ListenSSL.

ListenSSL is describing a secure connection

with a transport layer encryption based on SSL/TLS.

Listen on the other hand is describing a plain

TCP/IP connection.

In the following two sections they are introduced:

In the subsections named Listen of the server

configuration we define sockets providing plain connections based

on TCP/IP. The following table describes the attributes you can set

for plain TCP/IP connections:

Table 3.4. Listen settings

| Name | Description |

|---|---|

| Address | Listening address (IPv4 or IPv6) of the server. '127.0.0.1' and '::1' stand for the loopback address (IPv4 an IPv6 respectively). The listener wildcards '*' or '0.0.0.0' (IPv4) or '::' (IPv6) are also accepted. |

| Port | Connection port. Ports 7649-7671 and 7934-7966 are unassigned according to IANA (last updated 2010-03-11). The default ports are 7661 for unencrypted connections and 7961 for SSL connections. Note that 7654 seems to be used by Winamp. |

| Identifier | Identifier that can be referenced in authorization functions to classify connections and to define authorization based on it. |

| MaxConnections | (optional) The maximum number of simultaneus connections for this socket. |

| Restrictions | (optional) Defines the subsection containing IP restrictions on the connection. If not defined, the connection is allowed from everywhere. The configuration of IP restrictions will be defined in the section IP restrictions. |

The following Listen configuration shows an example plain TCP/IP

connection definition:

Server

{

Listen

{

Address localhost

Port 7661

Identifier "Interface 1"

Restrictions {

allow 192.168.201.0/24

}

}

}

In the subsections named ListenSSL of the

server configuration we define sockets providing secured connections

with full transport layer encryption based on SSL/TLS.

The following table describes the attributes you can set for secured

connections. The first five attributes are the same as for

sockets configured as plain TCP/IP (Listen)

as shown before:

Table 3.5. ListenSSL settings

| Name | Description |

|---|---|

| Address | Listening address (IPv4 or IPv6) of the server. '127.0.0.1' and '::1' stand for the loopback address (IPv4 an IPv6 respectively). The listener wildcards '*' or '0.0.0.0' (IPv4) or '::' (IPv6) are also accepted. |

| Port | Connection port. Ports 7649-7671 and 7934-7966 are unassigned according to IANA (last updated 2010-03-11). The default ports are 7661 for unencrypted connections and 7961 for SSL connections. Note that 7654 seems to be used by Winamp. |

| Identifier | Identifier that can be referenced in authorization functions to classify connections and to define authorization based on it. |

| MaxConnections | The maximum number of simultaneus connections for this port. |

| Restrictions | Defines the subsection containing IP restrictions on the connection. If not defined, the connection is allowed from everywhere. The configuration of IP restrictions will be defined in the section IP restrictions. |

| Certificate | File with the SSL certificate |

| Key | File with the SSL key |

| CAdirectory | Directory holding the CA certificate files. |

| CAchainFile | SSL CA chain file |

| Verify | ON/OFF switch to enabe/disable client certificate verification. |

The following configuration shows an example ListenSSL

definition:

Server

{

ListenSSL

{

Address localhost

Port 7961

Identifier "Interface 1"

MaxConnections 2

Certificate SSL/wolframed.crt

key SSL/wolframed.key

CAchainFile SSL/CAchain.pem

Verify Off

}

}

IP restrictions are defined as sub section restrictions

of the socket configurations (Listener and ListenerSSL)

in the server configuration.

Table 3.6. Restrictions settings

| Name | Argument | Description |

|---|---|---|

| Allow | IP address with optional network mask | Define an IP or network address

as allowed to connect from, if not explicitely

defined by a Deny directive.

If no allow is specified then

all IPs are allowed to connect from, if not

explicitely excluded by a deny

directive. So no allow is equivalent

to allow all

|

| Deny | IP address with optional network mask | Define an IP or network address

as forbidden to connect from. If a

deny directive refers to

an IP explictely defined or part of an

allow then the deny

is stronger and overrides the allow

declaration.

|

The logger configuration is defined in the Logging

section. The logger is defined for different backends.

We can define several backends for the logger.

Each backend defines the level (verbosity) of logging. For the log levels we use the type of the log message. The types of log messages can be listed in ascending order of their severity. Setting the log level to a type of log message means that the system logs all messages with equal or higher severity than the specified type. The log message types are listed in the following table. They are listed in ascending order of severity:

Table 3.7. Log message types

| Name | Description |

|---|---|

| DATA2 | Processing data messages, not truncated |

| DATA | Processing data messages with big chunks truncated |

| TRACE | Processing trace messages |

| DEBUG | Debug messages |

| INFO | Information messages |

| NOTICE | Important information messages |

| WARNING | Warning messages |

| ERROR | Processing error messages |

| SEVERE | Severe errors that should be analyzed. |

| CRITICAL | Critical errors that have to notified. |

| ALERT | Critical errors that have to be escalated to a person responsible immediately. |

| FATAL | Fatal errors that cause the server to shut down. |

In the following sub sections we list the different backends and how they can be configured.

For defining the backend to log to console (stderr), we

have to configure a subsection stderr of the

Logging section. For console logging we just

can define the logging level with level.

Example configuration:

Logging

{

Stderr {

Level INFO

}

}

For defining the backend to log to a file, we

have to configure a subsection LogFile of the

Logging section. For logging to file we

can define the logging level with Level

and the file name with Filename.

Example configuration:

Logging

{

LogFile {

Filename /var/log/wolframed.log

Level NOTICE

}

}

For defining the backend to log to syslog, we

have to configure a subsection Syslog of the

Logging section. For logging to syslog we

can define the logging level with Level,

the syslog facility with Facility

and the identifier with Ident.

Example configuration:

Logging

{

Syslog {

Ident wolframed

Facility LOCAL2

Level INFO

}

}

On Windows we can also log to eventlog.

For defining the backend to log to eventlog, we

have to configure a subsection Eventlog of the

Logging section. For logging to eventlog we

can define the logging level with Level,

the eventlog source with Source

and the identifier with Name.

Example configuration:

Logging

{

Eventlog {

Name Wolframe

Source wolframed

Level WARNING

}

}

The configuration of modules loaded by the server for processing

are defined in the LoadModules section.

The following table describes the settings in the modules:

Table 3.8. LoadModules configuration settings

| Name | Arguments | Description |

|---|---|---|

| Directory | path | Path to use as base path for relative module paths specified with 'Module'. If specified as relative path, it is relative to the directory containing the main configuration file. If not specified, the default module directory of Wolframe is used (for example /usr/lib64/wolframe/modules). |

| Module | path | Define a module to load by path. If the setting 'directory' was not specified then the path is relative to the library directory of Wolframe (subdirectory 'wolframe' of the user library directory) |

Example configuration:

LoadModules

{

Directory /usr/lib/wolframe

Module mod_db_postgresql

Module mod_auth_textfile

Module mod_filter_libxml2

}

The following table describes the global settings you can configure:

Table 3.9. Global Settings

| Name | Arguments | Description |

|---|---|---|

| ServerTokens | One of None, ProductOnly, Major, Minor, Revision, Build, OS | Define what to print in the initial greeting of the protocol (first message of the server). Every token above prints more information as the previous one, staring from 'None' which prints nothing, to 'OS' which prints 'Wolframe version 1.4.5.22, Linux, Ubuntu 14.04'. The default is 'None'. |

| ServerSignature | string | Define the string to be printed in the initial greeting of the protocol (first message of the server). The server signature gets added to the greeting string in brackets, for instance 'Wolframe version 1.4.5.22, Linux, Ubuntu 14.04 (CRM)'. The default is an empty string. |

Example configuration:

ServerTokens OS ServerSignature "CRM"

The server would send the following greeting string:

Wolframe version 1.4.5, Linux, Ubuntu 14.04 (CRM)

The Databases for Wolframe are configured in the Database

section of the configuration.

This section explains how to configure a Postgres database.

In order to use a Postgres database in Wolframe you have to configure the loading of the module mod_db_postgresql in the LoadModules section of your configuration. The addressed Postgres database server must be running and the database and the user configured must have been created before.

The configuration settings for PostgreSQL are splitted in two parts: The database configuration settings and the SSL configuration settings. The following three tables show the PostgreSQL database configuration settings, the PostgreSQL SSL configuration settings and the table with configurable SSL modes:

Table 3.10. PostgreSQL database configuration settings

| Name | Parameter | Description |

|---|---|---|

| Identifier | identifier | Database identifier used to reference this database. |

| Host | host name or IP address | Address of the PostgreSQL database server. Uses the unix domain socket if not defined. |

| Port | number | Port of the PostgreSQL database server. The default is 5432. |

| Database | identifier | Name of the database to connect to |

| User | identifier | User to connect to the database with |

| Password | string | Password to connect to the database with |

| ConnectionTimeout | number | Connection timeout in seconds. The default if not specified is 30 seconds. A value of '0' disables the connection timeout. |

| Connections | number | Maximum number of simoultaneus database connections (size of connection pool). Default if not specified is 4 connections. |

| AcquireTimeout | number | Maximum time allowed to acquire a database connection in seconds |

| StatementTimeout | number | The maximum allowed time to execute a statement in milliseconds. |

Table 3.11. SSL configuration settings

| Name | Parameter | Description |

|---|---|---|

| SslMode | identifier | How a SSL TCP/IP connection should be negotiated with the server. Possible values and their explanation can be found in the SSL Mode table below. |

| SslCert | filename | The file name of the client SSL certificate in case of an SSL connection to the database. |

| SslKey | filename | The file with the secret key used for the client certificate in case of an SSL connection to the database. |

| SslRootCert | filename | The File name of the root SSL CA certificate in case of an SSL connection to the database. |

| SslCRL | filename | The File name of the SSL certificate revocation list (CRL) in case of an SSL connection to the database. |

Table 3.12. SSL modes

| Name | Description |

|---|---|

| disable | Only try a non-SSL connection |

| allow | First try a non-SSL connection. If that fails, try an SSL connection |

| prefer | First try an SSL connection. If that fails, try a non-SSL connection. This is the default. |

| require | Only try an SSL connection. If a root CA file is present, verify the certificate. |

| verify-ca | Only try an SSL connection, and verify that the server. The certificate is issued by a trusted CA. |

| verify-full | Only try an SSL connection, verify that the server certificate is issued by a trusted CA and that the server hostname matches that in the certificate. |

This section explains how to configure a Sqlite database.

In order to use an Sqlite3 database in Wolframe you have to configure the loading of the module mod_db_sqlite3 in the LoadModules section of your configuration. The database file configured must have been created before.

The following table shows the configuration settings for a Sqlite3 database in Wolframe.

Table 3.13. Sqlite3 database configuration settings

| Name | Parameter | Description |

|---|---|---|

| Identifier | identifier | Database identifier used to reference this database. |

| File | filepath | Store the SQLite database into the file specified as argument. This parameter is required and the file must have been created before. |

| Connections | number | Number of simultaneous connections to the database. Specifies the size of the pool of connections used for database transactions. The default number of connections is 4 if not configured. |

| ForeignKeys | yes or no | Setting foreignKeys to yes

enables referential integrity in the database. This is

actually the same as executing 'PRAGMA foreign_keys=true'.

The default is yes. |

| Profiling | yes or no | Shows the SQL commands being executed and their

execution time in milliseconds. Default is no. |

| Extension | filename | Loads the SQLite3 extension module specified as argument. This is useful to load native code into SQLite3 imlementing for instance new database functions. Have a look at the SQLite3 'Run-Time Loadable Extensions' section at http://www.sqlite.org/loadext.html. |

This section explains how to configure an Oracle database.

In order to use an Oracle database in Wolframe you have to configure the loading of the module mod_db_oracle in the LoadModules section of your configuration. The addressed Oracle database server must be running and the database and the user configured must have been created before.

The following table shows the Oracle database configuration settings:

Table 3.14. Oracle database configuration settings

| Name | Parameter | Description |

|---|---|---|

| Identifier | identifier | Database identifier used to reference this database. |

| Host | identifier or string | Address of the Oracle database server |

| Port | number | Port of the Oracle database server, the default is 1521 if not specified |

| Database | identifier | Name of the database to connect to, this is the Oracle SID. |

| User | identifier | User to connect to the database with |

| Password | string | Password to connect to the database with |

| Connections | number | Maximum number of simultaneous database connections (size of connection pool) |

| AcquireTimeout | number | Maximum time allowed to acquire a database connection in seconds |

Table of Contents

AAAA is an acronym for authentication,authorization, accounting and auditing. The different aspects of AAAA in the application are linked together as they all are based on the identity of the user. The identitiy of a user is authenticated after his login. Depending on the identity and the method the user was authenticated, he gets privileges to access resources or execute procedures. Wolframe provides hooks for calling authorization to enforce checks of these privileges. Every request and every transaction can be bound to authorization. Every function executed can use authorization functions to check privileges.

Accounting and auditing functions use the identity of the user to log the actions of a session.

Wolframe defines authentification methods as configurable modules. New modules can be added easily. Authorization and auditing calls are implemented as ordinary functions that can be written in any language that has bindings for Wolframe. A layer inbetween declares what data of the users context is passed to authorization or audit/accounting functions. This makes it easy to enforce rules how to handle sensible login data in a controlled way.

The configuration section AAAA bundles the

definition of mechanisms and resources needed for AAAA.

The following example shows an empty AAAA configuration

section:

AAAA

{

;... configuration for

; authentication,authorization,auditing and accounting

}

The identitiy of a user is authenticated after his login.

The identity and the method he was

The aspect of authentication is configured in the

subsection Authentication of the AAAA section

in the server configuration.

In order to use authentication in Wolframe you have to configure the

loading of the module implementing it. The authentication mech explained

here as example is implemented in the module mod_auth_textfile.

For using it you have to declare the module mod_auth_textfile

in the LoadModules section of your configuration before.

The following table shows the configuration settings for Authentication in Wolframe.

Table 4.1. Authentication configuration settings

| Name | Parameter | Description |

|---|---|---|

| RandomDevice | address or path | Specifies the random device to be used. This configuration setting is optional. The system should have a reasonable default for most applications. |

| Textfile | section | Specifies the block with the configuration for authentication based on a textfile with WOLFRAME-CRAM as authentication mech. |

The following example shows an Authentication

configuration with the Wolframe textfile authentication module

that provides an authentication over the "WOLFRAME-CRAM" protocol:

AAAA

{

Authentication

{

randomDevice /dev/urandom

TextFile

{

identifier "TextFileAuth"

file /var/tmp/wolframe.auth

}

}

Currently only the authentication protocol for

WOLFRAME-CRAM is implemented. The

client can choose between no authentication

(if configured to allow no authentication)

or WOLFRAME-CRAM. You configure

it as shown in the example above. For the setup of a

client see the chapter "Clients".

Authorization in Wolframe has two levels. The first level is authorization based on connection info, e.g. is a client allowed/denied to connect from a certain ip or network. Connection info based authorization happens on connect to the server.

The second level is command authorization based on identity (user attributes, connection characteristics) that is done on execution of a function. Identity based authorization can can be defined for the access of resources. It's is up to us to define the access control matrix

The authorization based on connection info is implemented in the server configuration as IP restrictions (see chapter "IP restrictions").

Authorization

The aspect of authorization is configured in the

subsection Authorization of the AAAA section

in the server configuration.

The only working configuration is the

default Authorization configuration with

the setting to allow access to anybody authenticated

that is not explicitely denied:

AAAA

{

Authorization

{

Authorization {

default allow

}

}

}

The command execution authorization is not implemented yet completely. The idea is to have programs that map authorization function calls to Wolframe function calls. The language to describe these programs is not yet defined. The mechanism to map the authorize requests to the function calls already exists. Authorization based on command execution will be a subject of the next release.

The aspect of auditing and accounting is configured in the

subsection Audit of the AAAA section

in the server configuration. Accounting is a sub aspect of

auditing. The audit (and accounting) calls are implemented as normal

Wolframe function calls. The configuration defines additional parameters

depending on the authentication status for the audit function calls

and resources to be referenced.

The following example shows an Audit

configuration with the setting use the textfile /var/tmp/wolframe.audit

as audit log:

AAAA

{

Audit

{

TextFile

{

file /var/tmp/wolframe.audit

}

}

}

The mechanism of auditing, the calling of audit functions and their declaration in the transaction layer is implemented and tested. The underlaying methods, for example to log to a text file as configured in the example above is not yet implemented, but is subject of the next release.

Table of Contents

- 5.1. Processor Configuration

- 5.2. Application Server Requests

- 5.3. Command handler

- 5.3.1. The standard command handler

- Introduction

- Example configuration

- Example command description

- Command description language

- Keywords

- Simple document map

- Command with action prefix

- Explicit function name declaration

- Returned document declaration

- Returned document meta data

- Skipping the document validation

- Return a standalone document

- Explicit filter definitions for a command

- Authorization checks

- Adding parameters from the execution context

- Using brackets

- Overview

- 5.4. Functions

- 5.4.1. Transactions in TDL

- Introduction

- Some internals

- Configuration

- Language description

- Subroutines

- Transaction function declarations

- Main processing instructions

- Preprocessing instructions

- Selector path

- Referencing Database Results

- Naming database results

- Referencing Subroutine Parameters

- Constraints on database results

- Rewriting error messages for the client

- substructures in the result

- Explicit sefinition of elements in the result

- Database specific code

- Subroutine templates

- Includes

- Auditing

- 5.4.2. Functions in .NET

- 5.4.3. Functions in python

- 5.4.4. Functions in Lua

- 5.4.5. Functions in native C++

- 5.5. Forms

- 5.6. Filters

- 5.7. Testing and defect handling

In the this chapter we will see how application server requests look like. We will have a look at the Wolframe standard command handler that handles the requests and how it is configured. Then we will show how to write programs that declare the functions executing the requests and how you link them to your application. Finally we will describe the tools available in Wolframe for defect handling and testing.

The processing of application server requests is configured in the

section Processor of the server configuration.

The following example shows an empty Processor configuration

section:

Processor

{

;... processing configuration

}

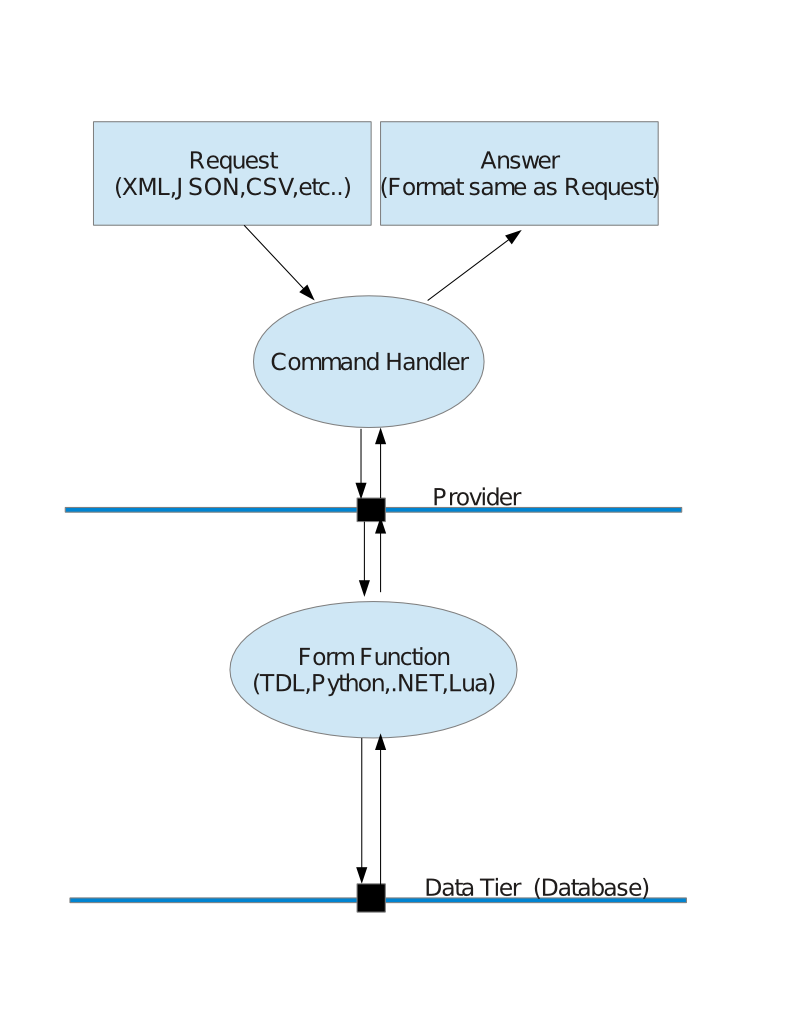

Wolframe application server requests consist of a named command and a structured content (document) as argument.

The following illustration shows the processing of one client request to the server. A call of the Wolframe logic tier gets to a command handler that calls functions given by the provider to perform the transaction requested.

Command handlers define the mapping of server requests to functions to execute. This chapter introduces the standard command handler.

The Wolframe standard command handler is called directmap and named so in the configuration because it only declares a redirection of the commands to functions based on the document type and the command identifier specified by the client in the request.

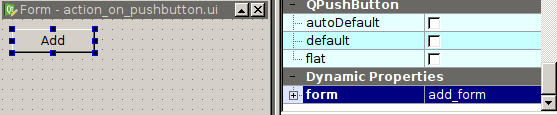

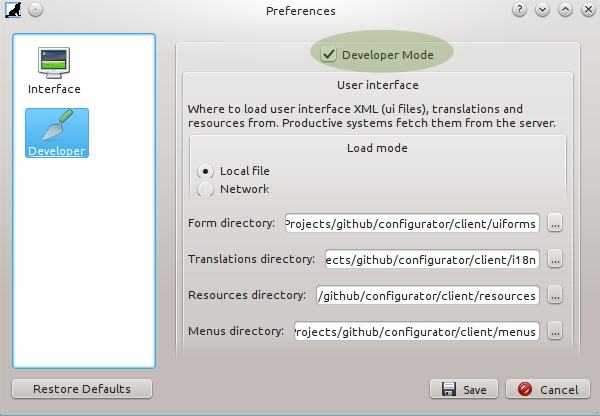

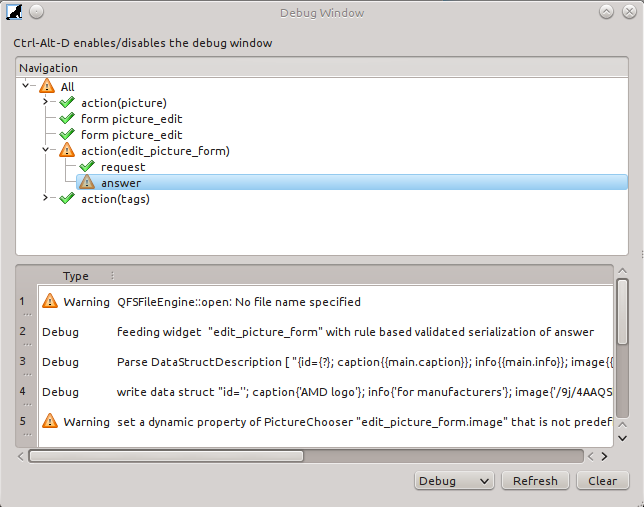

The declarations of the Wolframe Standard Command Handler (directmap) are specified in a program source file with the extension '.dmap' that is declared in the configuration.

The following annotated configuration example

declares (1) a program example.tdl written in the

transaction definition language (TDL)

to contain the function declarations that can be called by

the command handler. It (2) declares the database with

name pgdb to be used as the

database for transactions. It (3) loads a description

example.dmap that will declare the mappings

of commands to the filters used and functions called.

It (4) specifies the filter with name libxml2

to be used for documents of format XML and

(5) the filter with name cjson

to be used for documents of format JSON, if not specified

else in example.dmap.

; Simple Data Processing Configuration Example

Processor

{

; Programs to load:

Program example.tdl ; (1) a program with functions (in TDL)

Database pgdb ; (2) references transaction database

; Command handlers to load:

Cmdhandler

{

Directmap ; the standard command handler

{

Program example.dmap ; (3) description of command mappings

Filter XML=libxml2 ; (4) std filter for XML document format

Filter JSON=cjson ; (5) std filter for JSON document format

}

}

}

The following source example could be one of the example.dmap

in the configuration example introduced above. It defines two commands. The first one links a command "insert" with document type

"Customer" as content to a transaction function "doInsertCustomer". The content is validated

automatically against a form named "Customer" if not explicitly defined else.

The command has no result except that it succeeds or fails. The second example command links a

command "get" with a document type "Employee" to a function "doSelectEmployee".

The input is not validated and the transaction output is validated and mapped through the

form "Employee".

COMMAND insert Customer CALL doInsertCustomer; COMMAND get Employee SKIP CALL doSelectEmployee RETURN Employee;

A command map description file like our example shown consists of instructions started with

COMMAND and terminated by semicolon ';'. The first argument after COMMAND is the name of the

command followed by the name of the document type of the input document. The name of the command

is optional. If not specified the first argument after COMMAND names the input document type.

Conflicts with keywords and names are solved by using strings instead of identifiers. The standard command handler description language has the following keywords:

| COMMAND |

| CALL |

| CONTEXT |

| RETURN |

| SKIP |

| FILTER |

| INPUT |

| OUTPUT |

| AUTHORIZE |

The following example shows the simplest possible declaration. It states that documents with the document type "Document" are forwarded to a function with the same name "Document".

COMMAND Document;

The next example adds a action name to the declaration. The implicit name of the function called is insertDocument:

COMMAND insert Document;

For declaring the function called explicitly like for example a function doInsertDocument we need to declare it with CALL <functionname>:

COMMAND insert Document CALL doInsertDocument;

The document type returned is specified with RETURN <doctype>:

COMMAND process Document RETURN Document;

or with explicit naming of a function called

COMMAND process Document CALL doProcessDocument RETURN Document;

We can define additional document meta data or overload existing document meta or inherited document meta from input or a referenced form in the output. This is done with a comma separated list of attribute assignments in curly brackets after the document type name like:

COMMAND process Document

CALL doProcessDocument

RETURN Document { root = 'doc', schema = 'bla.com/schema' };

If you want to skip the input document validation, either because you are dealing

with legacy software where a strict definition of a schema is not possible or

because the function called has strict typing and validates the input on its own (.NET,C++),

then you can add a declaration SKIP:

COMMAND process Document SKIP CALL doProcessDocument RETURN Document;

The same you can specify for the output with a SKIP

following the RETURN of the output declaration:

COMMAND process Document CALL doProcessDocument RETURN SKIP Document;

For being able to skip validation of output of a processed XML

we have additionally to specify the root element as document

meta data. This defintion can be part of a form declaration

(not used for validation) or it can be specified after

the RETURN SKIP and the document

type identifier in a standard command handler instruction.

The following example shows such a definition with 'list'

as root element defined. Such a command definition makes sense

for strongly typed languages like .NET or native C++ where data

validation can be delagated completely to the strongly typed

structure definition of the called function.

COMMAND process Document

CALL doProcessDocument RETURN SKIP Document {root='list'};

If we want to return a document as standalone

(standalone="yes" in the header in case of XML) without validation,

we have to declare this with explicit document meta data as

RETURN SKIP {standalone='yes',root='root'}.

COMMAND process Document

CALL doProcessDocument

RETURN SKIP {standalone='yes',root='list'};

For most processing it's enough to declare the standard filters in the configuration of the command handler. But in certain cases we want to declare a filter explicitly for a command, for example to preprocess a certain document type with an XSLT filter. Explicitly declared filters always refer to a document format and documents of other formats have to be converted first or they cannot be preprocessed. The conversions mechanisms we will explain in detail later. Explicit filter declarations are done with

FILTER <name>orFILTER INPUT <inputfiltername>orFILTER OUTPUT <outputfiltername>orFILTER INPUT <inputfiltername> OUTPUT <outputfiltername>

Here is an example:

COMMAND process Document FILTER INPUT myXsltInputFilter

CALL doProcessDocument RETURN Document;

We can tag a command to be allowed only after an authorization check. The check denies command execution with an error if the login of the user does not allow the execution of the command. The call is the same as in TDL for example. Authorization checks are triggered by the AUTHORIZE attribute with one or two arguments as follows:

AUTHORIZE <authfunc>orAUTHORIZE <authfunc> <resource>

Wolframe functions that are written in a language other than C++ are usually pure data in / data out functions. So the input document defines the input. But sometimes we need to include data from the user context into processing, for example for inserting or editing some personal data. Wolframe gives us the possibility to include data from the execution context into the input document. We do this with the directive CONTEXT followed by a list of comma ',' separated assignments in curly brackets '{' '}'. The following example adds an element 'uname' that does not exist yet in the input to the input document before execution (after validation). The value of the add 'uname' element is the user name of the user issuing the request.

COMMAND insert UserData CONTEXT { uname = UserName }

CALL doInsertUserData;

This way we keep the processing functions as pure data functions. We are in certain cases able to inject some login dependent data in a controlled way, without exposing an API to all language bindings for being able to access everything from everywhere.

For better readability you can use optional '(' ')' brackets on the arguments of the command declaration:

COMMAND ( process Document )

FILTER INPUT myXsltInputFilter CALL doProcessDocument

RETURN Document;

Each command declaration has as already explained the form

COMMAND <doctype> [OPTIONS] ;orCOMMAND <action> <doctype> [OPTIONS] ;

The following table shows an overview of the elements that can be used in the [OPTIONS] part of the command:

Table 5.1. Options

| Keywords | Arguments | Description |

|---|---|---|

| CALL | Function Name | Names the function to be called for processing the request |

| RETURN | Document Type | Specifies the type of the document returned and forces validation of the output |

| RETURN SKIP | Document Type | Specifies the type of the document returned but skips validation of the output |

| SKIP | (no arguments) | Specifies the input document validation to be skipped |

| FILTER INPUT | Filter Name | Specifies that the filter <Name> should be used as input filter |

| FILTER OUTPUT | Filter Name | Specifies that the filter <Name> should be used as output filter |

| FILTER | Filter Name | Specifies that the filter <Name> should be used both as input and output filter |

| AUTHORIZE | func res | Specifies that the function <func> should be called with the resource <res> to check if the user is allowed to execute the command. |

This chapter describes how functions are linked to the logic tier. It gives an overview on the language bindings available for Wolframe.

For defining database transactions Wolframe introduces a language called TDL (Transaction Definition Language). TDL embeddes the language of the underlaying database (SQL) in a language that defines how sets of elements of input and output are addressed.

This chapter also describes how data types are defined that can be used in data definion languages (DDL) for form desciptions. Forms and their definition will be introduced in a different chapter.

After reading this chapter you should be able to write functions of the Wolframe logic tier on your own.

Be aware that you have to configure a programming language of the logic tier in Wolframe before using it. Each chapter introducing a programming language will have a section that describes how the server configuration of Wolframe has to be extended for its availability.

For the description of transactions Wolframe provides the transaction definition language (TDL) introduced here. Wolframe transactions in TDL are defined as functions in a transactional context. This means that whatever is executed in a transaction function belongs to a database transaction. The transaction commit is executed implicitely on function completion. Errors or a denied authorization or a failing audit operation lead to an abort of the database transaction.

A TDL transaction function takes a structure as input and returns a structure as output. The Wolframe database interface defines a transaction as object where the input is passed to as a structure and the output is fetched from it as a structure.

TDL is a language to describe the building of transaction input and the building of the result structure from the database output. It defines a transaction as a sequence of instructions on multiple data. An instruction is either described as a single embedded database command in the language of the underlying database or a TDL subroutine call working on multiple data.

Working on multiple data means that the instruction is executed for every item of its input set. This set can consist of the set of results of a previous instruction or a selection of the input of the transaction function. A "for each" selector defines the input set as part of the command.

Each instruction result can be declared as being part of the transaction result structure. The language has no flow control based on state variables other than input and results of previous commands and is therefore not a general purpose programming language. But because of this, the processing and termination of the program is absolutely predictable.

As possibility to convert the input data before passing it to the database, the transaction definition language defines a preprocessing section where globally defined Wolframe functions can be called for the selected input. To build an output structure that cannot be modeled with a language without control structures and recursion, TDL provides the possibility to define a function as filter for postprocessing of the result of the transaction function. This way it is for example possible to return a tree structure as TDL function result.

The TDL is - as most SQL databases - case insensitive. For clearness and better readability TDL keywords are written in uppercase here. We recommend in general to use uppercase letters for TDL keywords. It makes the source more readable.

TDL is compiled to a code for a virtual machine. Setting the log level to DATA will print the symbolic representation of the code as log output. The internals of the virtual machine will be discussed in a different chapter of this book.

Each TDL program source referenced has to be declared in the

Processor section of the configuration with

program <sourcefile>.

A TDL program consists of subroutine declarations and exported transaction function declarations. Subroutines have the same structure as transaction function blocks but without pre- and postprocessing and authorization method declarations.

A subroutine declaration starts with the Keyword SUBROUTINE

followed by the subroutine name and optionally some parameter names

in brackets ('(' ')') separated by comma.

The declared subroutine name identifies the function in the scope

of this sourcefile after this subroutine declaration.

The name is not exported and the subroutine not available for other TDL

modules. With includes described later we can reuse code.

The body of the function contains the following parts:

DATABASE <database name list>

This optional definition is restriction the definition and availability of the function to a set of databases. The databases are listed by name separated by comma (','). The names are the database id's defined in your server configuration or database names as specified in the module. If the database declaration is omitted then the transaction function is avaiable for any database. This declaration allows you to run your application with configurations using different databases but sharing a common code base.

BEGIN <...instructions...> END

The main processing block starts with

BEGINand ends withEND. It contains all the commands executed when calling this subroutine from another subroutine or a transaction function.

The following pseudocode example shows the parts of a subroutine declaration:

SUBROUTINE <name> ( <parameter name list> ) DATABASE <list of database names> BEGIN ...<instructions>... END

The DATABASE declaration is optional.

A transaction function declaration starts with the Keyword TRANSACTION

followed by the name of the transaction function. This name identifies the

function globally. The body of the function contains the following parts:

AUTHORIZE ( <auth-function>, <auth-resource> )

This optional definition is dealing with authorization and access rights. If the authorization function fails, the transaction function is not executed and returns with error. The <auth-function> references a form function implementing the authorization check. The <auth-resource> is passed as parameter with name 'resource' to the function.

DATABASE <database name list>

This optional definition is restriction the definition and availability of the function to a set of databases. The databases are listed by name separated by comma (','). The names are the database id's defined in your server configuration. If the database declaration is omitted then the transaction function is avaiable for any database. This declaration allows you to run your application with configurations using different databases but sharing a common code base.

RESULT FILTER <post-filter-name>

This optional declaration defines a function applied as post filter to the transaction function. The idea is that you might want to return a structure as result that cannot be built by TDL. For example a recursive structure like a tree. The result filter function is called with the structure printed by the main processing block (BEGIN .. END) and the result of the filter function is returned to the caller instead.

PREPROC <...instructions...> ENDPROC

This optional block contains instructions on the transaction function input. The result of these preprocessing instructions are put into the input structure, so that they can be referenced in the main code definition block of the transaction. We can call any global normalization or form function in the preprocessing block to enrich or transform the input to process.

BEGIN <...instructions...> END

The main processing block starts with

BEGINand ends withEND. It contains all the database instructions needed for completing this transaction.AUDIT [CRITICAL] <funcname...> WITH BEGIN <...instructions...> END

This optional block specifies a function that is executed at the end of a transaction. The input of the function is the structure built from the output of the instructions block. If CRITICAL is specified then the transaction fails (rollback) if the audit function fails. Otherwise there is just the error of the audit function logged, but the transaction is completed (commit). You can specify several audit functions. The variables in the instructions block refer to the scope of the main processing block. So you can reference everything that is referencable after the last instruction of the main processing block.

AUDIT [CRITICAL] <funcname...> ( <...parameter...> )

If the input structure of the audit function is just one parameter list this alternative syntax for an audit function declaration can be used. You simply specify the audit function call after the AUDIT or optionally after the CRITICAL keyword.

The following pseudo code snippet shows the explained building blocks in transaction functions together:

TRANSACTION <name> AUTHORIZE ( <auth-function>, <auth-resource> ) DATABASE <list of database names> RESULT FILTER <post-filter-name> PREPROC ...<preprocessing instructions>... ENDPROC BEGIN ...<instructions>... END AUDIT CRITICAL <funcname> ( ...<parameter>... )

The lines with AUTHORIZE,DATABASE and RESULT FILTER are optional. So is the preprocessing block PREPROC..ENDPROC. A simpler transaction function looks like the following:

TRANSACTION <name> BEGIN ...<instructions>... END

Main processing instructions defined in the main execution block of a subroutine or transaction function consist of three parts in the following order terminated by a semicolon ';' (the order of the INTO and FOREACH expression can be switched):

INTO <result substructure name>

This optional directive specifies if and where the results of the database commands should be put into as part of the function output. In subroutines this substructure is relative to the current substructure addressed in the callers context. For example a subroutine with an "INTO myres" directive in a block of an "INTO output" directive will write its result into a substructure with path "output/myres".

FOREACH <selector>

This optional directive defines the set of elements on which the instruction is executed one by one. Specifying a set of two elements will cause the function to be called twice. An empty set as selection will cause the instruction to be ignored. Without quantifier the database command or subroutine call of the instruction will be always be executed once.

The argument of the FOREACH expression is either a reference to the result of a previous instruction or a path selecting a set of input elements.

Results of previous instructions are referenced either with the keyword RESULT referring to the result set of the previous command or with a variable naming a result set declared with this name before.

Input elements are selected by path relative to the path currently selected, starting from the input root element when entering a transaction function. The current path selected and the base element of any relative path calculated in this scope changes when a subroutine is called in a FOREACH selection context. For example calling a subroutine in a 'FOREACH person' context will cause relative paths in this subroutine to be sub elements of 'person'.

DO <command>

Commands in an instruction are either embedded database commands or subroutine calls. Command arguments are either constants or relative paths from the selector path in the FOREACH selection or referring to elements in the result of a previous command. If an argument is a relative path from the selector context, its reference has to be unique in the context of the element selected by the selector. If an argument references a previous command result it must either be unique or dependent an the FOREACH argument. Results that are sets with more than one element can only be referenced if they are bound to the FOREACH quantifier.

The following example illustrate how the FOREACH,INTO,DO expressions in the main processing block work together:

TRANSACTION insertCustomerAddresses

BEGIN

DO SELECT id FROM Customer

WHERE name = $(customer/name);

FOREACH /customer/address

DO INSERT INTO Address (id,address)

VALUES ($RESULT.id, $(address));

END

Preprocessing instructions defined in the PREPROC execution block of a transaction function consist similar to the instructions in the main execution block of three parts in the following order terminated by a semicolon ';' (the order of the INTO and FOREACH expression can be switched and has no meaning, e.g. FOREACH..INTO == INTO..FOREACH):

INTO <result substructure name>

This optional directive specifies if and where the results of the preprocessing commands should be put into as part of the input to be processed by the main processing instructions. The relative paths of the destination structure are calculated relative to a FOREACH selection element.

FOREACH <selector>

This optional directive defines the set of elements on which the instruction is executed one by one. The preprocessing command is executed once for each element in the selected set and it will not be executed at all if the selected set is empty.

DO <command>

Commands in an instruction are function calls to globally defined form functions or normalization functions. Command arguments are constants or relative paths from the selector path in the FOREACH selection. They are uniquely referencing elements in the context of a selected element.

The following example illustrate how the "FOREACH, INTO, DO" expressions in the main processing block work together:

TRANSACTION insertPersonTerms

PREPROC

FOREACH //address/* INTO normalized

DO normalizeStructureElements(.);

FOREACH //id INTO normalized

DO normalizeNumber(.);

ENDPROC

BEGIN

DO UNIQUE SELECT id FROM Person

WHERE name = $(person/name);

FOREACH //normalized DO

INSERT INTO SearchTerm (id, value)

VALUES ($RESULT.id, $(.));

END

An element of the input or a set of input elements can be selected by a path. A path is a sequence of one of the following elements separated by slashes:

Identifier

An identifier uniquely selects a sub element of the current position in the tree.

*

Anp asterisk selects any sub element of the current position in the tree.

..

Two dots in a row select the parent element of the current position in the tree.

.

One dots selects the current element in the tree. This operator can also be useful as part of a path to force the expression to be interpreted as path if it could also be interpreted as a keyword of the TDL language (for example

./RESULT).

A slash at the beginning of a path selects the root element of the transaction function input tree. Two subsequent slashes express that the following node is (transitively) any descendant of the current node in the tree.

Paths can appear as argument of a FOREACH selector where they specify the set of elements on which the attached command is executed on. Or they can appear as reference to an argument in a command expression where they specify uniquely one element that is passed as argument to the command when it is executed.

When used in embedded database statements, selector paths are referenced

with $(<path expression>). When used as database

function or subroutine call arguments path expressions can be used in